Patch Net-next Bnx2x: Revising Locking Scheme For Mac


1. Important features (AKA: the cool stuff)

1.1. Memory Resource Controller

Recommended LWN article (somewhat outdated, but still interesting):

The memory resource controller is a cgroups-based feature. Cgroups, aka 'Control Groups', is a feature that was merged in 2.6.24. Its purpose is to be a generic framework into which several 'resource controllers' can plug to manage different resources of the system, such as process scheduling or memory allocation. It also offers a unified user interface, based on a virtual filesystem, where administrators can assign arbitrary resource constraints to a group of chosen tasks.

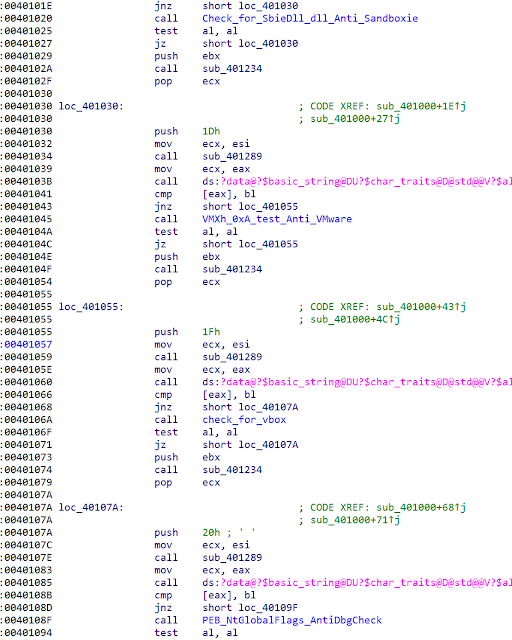

- Commit dff173d ('bnx2x: Fix statistics locking scheme') changed the bnx2x locking around statistics state to use a mutex, but the lock is also accessed from a timer, which is forbidden. [If compiled with CONFIG_DEBUG_MUTEXES, logs show a warning about accessing the mutex in interrupt context.] This moves the implementation into using a.

- Memory contents when reading from the /dev/vhost-net device file. bnx2x: Collect the device debug information during Tx timeout. cxgbit: call neigh_event_send() to update MAC address (bsc#1097585). Fix Patch-mainline header - fix kabi. i40e: hold the RTNL lock while changing interrupt schemes (bsc#1101816).

For example, two resource controllers have already been merged: Cpusets and Group Scheduling. The first binds CPU and memory nodes to an arbitrarily chosen group of tasks (a cgroup), and the second binds a CPU bandwidth policy to the cgroup. The memory resource controller isolates the memory behavior of a group of tasks (a cgroup) from the rest of the system. It can be used to:

- Isolate an application or a group of applications. Memory-hungry applications can be isolated and limited to a smaller amount of memory.
- Create a cgroup with a limited amount of memory; this can be used as a good alternative to booting with mem=XXXX.
- Let virtualization solutions control the amount of memory they want to assign to a virtual machine instance.
- Let a CD/DVD burner control the amount of memory used by the rest of the system, to ensure that burning does not fail due to lack of available memory.

The configuration interface, like that of all cgroups, works by mounting the cgroup filesystem with the '-o memory' option, creating an arbitrarily named directory (the cgroup), adding tasks to the cgroup by writing their PIDs to the 'tasks' file inside the cgroup directory, and reading or writing the following files: 'memory.limit_in_bytes', 'memory.usage_in_bytes' (memory statistic for the cgroup), 'memory.stat' (more statistics: RSS, caches, inactive/active pages), 'memory.failcnt' (number of times that the cgroup exceeded the limit), and 'mem_control_type'. OOM conditions are also handled per cgroup: when the tasks in the cgroup exceed the limit, the OOM killer is invoked to kill one of the tasks in that specific cgroup.
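As a rough sketch of the configuration steps above, the following Python helper writes a limit and attaches tasks through the file interface. It assumes the cgroup filesystem is already mounted with '-o memory' and that `cgroup_dir` points inside that mount; the function names and the example path are illustrative, not part of any kernel API.

```python
import os


def configure_memory_cgroup(cgroup_dir, limit_bytes, pids):
    """Create a cgroup directory, set its memory limit, and attach tasks.

    cgroup_dir is assumed to live under a cgroup filesystem mounted with
    '-o memory'; on a real system the kernel provides the control files.
    """
    os.makedirs(cgroup_dir, exist_ok=True)

    # Cap the memory the group may use.
    with open(os.path.join(cgroup_dir, "memory.limit_in_bytes"), "w") as f:
        f.write(str(limit_bytes))

    # Attach tasks, one PID per write.
    for pid in pids:
        with open(os.path.join(cgroup_dir, "tasks"), "a") as f:
            f.write(f"{pid}\n")


def read_usage(cgroup_dir):
    """Return the group's current memory usage in bytes."""
    with open(os.path.join(cgroup_dir, "memory.usage_in_bytes")) as f:
        return int(f.read().strip())
```

For instance, `configure_memory_cgroup("/cgroups/burner", 64 * 1024 * 1024, [os.getpid()])` would limit the calling process to 64 MB, assuming a hypothetical mount point of /cgroups and sufficient privileges.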

Code: (commit, )

1.2. Real Time Group scheduling

Group scheduling is a feature introduced in an earlier release. It allows assigning process scheduling priorities by means other than nice levels. For example, given two users on a system, you may want to assign 50% of CPU time to each one, regardless of how many processes each one is running (traditionally, if one user is running, for example, 10 CPU-bound processes and the other user only 1, the latter would be starved of CPU time); this is what the 'group tasks by user id' configuration option of Group Scheduling does. You may also want to create arbitrary groups of tasks and give them CPU time privileges; this is what the 'group tasks by Control Groups' option does, basing its configuration interface on cgroups (a feature introduced in 2.6.24 and described in the 'Memory resource controller' section).


Those are the two working modes of Group Scheduling. Additionally, there are several types of tasks. What 2.6.25 adds to Group Scheduling is the ability to also handle real time (aka SCHED_RT) processes. This makes it much easier to handle RT tasks and give them scheduling guarantees.

Documentation: Code: (commit, )

There's serious interest in running RT tasks on enterprise-class hardware, so a large number of enhancements to the RT scheduling class and load balancer have been merged to provide optimum behaviour for RT tasks.
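The two-user example from this section can be made concrete with a little arithmetic. The sketch below is an idealized model, assuming every process is CPU-bound and equally weighted; the function names are illustrative and do not correspond to any scheduler interface.

```python
def per_process_shares(procs_per_user):
    """CPU share per user when every process is scheduled as an equal peer."""
    total = sum(procs_per_user.values())
    return {user: count / total for user, count in procs_per_user.items()}


def per_user_shares(procs_per_user):
    """CPU share per user when tasks are grouped by user id.

    Each user with at least one runnable process gets an equal slice,
    no matter how many processes that user runs.
    """
    active = [user for user, count in procs_per_user.items() if count > 0]
    return {
        user: (1 / len(active) if count > 0 else 0.0)
        for user, count in procs_per_user.items()
    }
```

With `{"alice": 10, "bob": 1}`, the per-process model gives bob 1/11 of the CPU, while grouping by user gives each of them one half.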

Code: (commit, )

1.3. RCU Preemption support

Recommended LWN article:

RCU is a very powerful locking scheme used in Linux to scale to a large number of CPUs on a single system. However, it wasn't well suited for low-latency, RT-ish workloads, and some parts could cause high latency. In 2.6.25, RCU can be preempted, eliminating that source of latencies and making Linux a bit more RT-ish.

Code: (commit, )

1.4. FIFO ticket spinlocks in x86

Recommended LWN article:

In certain workloads, spinlocks can be unfair, i.e. a process spinning on a spinlock can be starved up to 1,000,000 times. Starvation in spinlocks was usually not considered a problem, on the assumption that such a spinlock would become a performance problem before any starvation was noticed, but testing has shown the contrary. It is always possible to find an obscure corner case that generates a lot of contention on some lock, and which processor grabs the lock next is random. With the new spinlocks, processes grab the spinlock in FIFO order, ensuring fairness (and, more importantly, putting some bound on how long a waiter can be kept waiting). Spinlocks configured to run on machines with more than 255 CPUs use a 32-bit value, and 16 bits when the number of CPUs is smaller (as a bonus, the maximum theoretical number of CPUs that spinlocks can support is raised to 65536).

Code: (commit, )

1.5. Better process memory usage measurement

Recommended LWN article:

Measuring how much memory processes are using is more difficult than it looks, especially when processes share memory. Features like /proc/$PID/smaps help, but they have not been enough.

2.6.25 adds new statistics to make this task easier. A new /proc/$PID/pagemap file is added for each process. In this file the kernel exports (in binary format) the physical page location of each page used by the process.

Comparing this file with the files of other processes shows which pages they are sharing. Another file, /proc/kpagemaps, exposes another kind of statistics about the pages of the system. The author of the patch, Matt Mackall, proposes two new metrics: 'proportional set size' (PSS), which divides each shared page by the number of processes sharing it, and 'unique set size' (USS), which counts the pages that are not shared. The first statistic, PSS, has also been added to each entry in /proc/$PID/smaps. Sample command-line and graphical tools that exploit all those statistics are also available.
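The two metrics are easy to state in code. The following sketch computes PSS and USS from an in-memory description of which physical pages each process maps; it does not parse the binary /proc files, and the 4096-byte page size and the input format are assumptions made for illustration.

```python
from collections import defaultdict

PAGE_SIZE = 4096  # assumed page size in bytes


def pss_and_uss(pages_by_process):
    """Compute (PSS, USS) in bytes for each process.

    pages_by_process maps a process name to the set of physical page
    frame numbers it has mapped.
    """
    # Count how many processes map each physical page.
    sharers = defaultdict(int)
    for pages in pages_by_process.values():
        for pfn in pages:
            sharers[pfn] += 1

    result = {}
    for name, pages in pages_by_process.items():
        # PSS: each page is charged in proportion to its number of sharers.
        pss = sum(PAGE_SIZE / sharers[pfn] for pfn in pages)
        # USS: only pages mapped by this process alone are counted.
        uss = sum(PAGE_SIZE for pfn in pages if sharers[pfn] == 1)
        result[name] = (pss, uss)
    return result
```

A useful property of PSS is that summing it over all processes gives the total memory actually in use, since every shared page is counted exactly once overall.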

Code: (commit, )

1.6. Timerfd syscall

timerfd is a feature that got merged in 2.6.22 but was disabled due to late complaints about the syscall interface. Its purpose is to extend timer event notifications to something other than signals, because doing such things with signals is hard. poll/epoll only cover file descriptors, so the options were a BSD-ish kevent-like subsystem or delivering timer notifications via a file descriptor, so that poll/epoll could handle them. There were implementations of both approaches, but the cleaner and more 'unixy' design of the file descriptor approach won.


In 2.6.25, a revised API has finally been introduced.

Code:

1.7. SMACK, Simplified Mandatory Access Control

Recommended LWN article:

The most used MAC solution in Linux is SELinux, a very powerful security framework. Smack is an alternative MAC framework, not as powerful as SELinux but simpler to use and configure. Linux is all about flexibility, and in the same way that it has several filesystems, this alternative security framework doesn't pretend to replace SELinux; it's just an alternative for those who find it better suited to their needs.

From the LWN article: Like SELinux, Smack implements Mandatory Access Control (MAC), but it purposely leaves out the role based access control and type enforcement that are major parts of SELinux. Smack is geared towards solving smaller security problems than SELinux, requiring much less configuration and very little application support.

1.8. Latencytop

Recommended LWN article:

Slow servers, skipping audio, jerky video: everyone knows the symptoms of latency. But knowing what's really going on in the system, what's causing the latency, and how to fix it are difficult questions without good answers right now. LatencyTOP is a Linux tool for software developers (both kernel and userspace), aimed at identifying where system latency occurs and what kind of operation/action is causing the latency to happen.

By identifying this, developers can then change the code to avoid the worst latency hiccups. There are many types and causes of latency, and LatencyTOP focuses on the type that causes audio skipping and desktop stutters. Specifically, LatencyTOP focuses on the cases where applications want to run and execute useful code, but some resource is not currently available (and the kernel then blocks the process). This is done both at the system level and at the per-process level, so that you can see what's happening to the system and which process is suffering and/or causing the delays. The latencytop userspace tool, including screenshots, is available online.

1.9. BRK and PIE executable randomization

This work originates in a Red Hat project that was started in 2003 to implement several security protections and is mainly used in Red Hat and Fedora. Many of its features were merged a long time ago, but not all of them.

In 2.6.25 two of them are being merged: brk randomization and PIE executable randomization. Those two features should make the address space randomization on i386 and x86_64 complete.

Code: (commit, )

1.10. Controller area network (CAN) protocol support

Recommended LWN article:

Controller Area Network (CAN or CAN-bus) is a computer network protocol and bus standard designed to allow microcontrollers and devices to communicate with each other without a host computer. This implementation has been contributed by Volkswagen.

Code: (commit, )

1.11. ACPI thermal regulation/WMI

In 2.6.25 ACPI adds thermal regulation support (commit, ) and a WMI (a proprietary extension to ACPI) mapper (commit, ).

1.12. EXT4 update

Recommended article:

The EXT4 mainline snapshot gets an update with a bunch of features: multi-block allocation, large blocksize up to PAGESIZE, journal checksumming, large file support, large filesystem support, inode versioning, and in-inode extended attributes on the root inode. These features should be the last ones that require on-disk format changes.

Other features that don't affect the disk format, like delayed allocation, have still to be merged.

Code: (commit, )

1.13. MN10300/AM33 architecture support

The MN10300/AM33 architecture is now supported under the 'mn10300' subdirectory. 2.6.25 adds support for MN10300/AM33 CPUs produced by MEI.

It also adds board support for the ASB2303 with the ASB2308 daughter board, and the ASB2305. The only processor supported is the MN103E010, which is an AM33v2 core plus on-chip devices.

1.14. TASK_KILLABLE

Most Unix systems have two sleeping states: interruptible and uninterruptible. 2.6.25 adds a third state: killable. While interruptible sleeps can be interrupted by any signal, killable sleeps can only be interrupted by fatal signals.


The practical implication of this feature is that NFS has been converted to use it, and as a result you can now kill -9 a task that is waiting for an NFS server that isn't contactable. Further uses include allowing the OOM killer to make better decisions (it can't kill a task that's sleeping uninterruptibly) and changing more parts of the kernel to use the killable state. If you have a task stuck in uninterruptible sleep with the 2.6.25 kernel, please report it, including the output of

$ ps -eo pid,stat,wchan:40,comm | grep D

Code: Commits 1-11 are prep-work. Patches 15 and 21 accomplish the major user-visible features, but depend on all the commits which have gone before them.
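A plain grep for D also matches any line that merely contains the letter D somewhere, such as a command name. As a slightly stricter alternative, the sketch below filters captured ps output by the STAT column only. The function name and the sample text are made up for illustration; the sample is not real system output.

```python
def uninterruptible_tasks(ps_output):
    """Return (pid, stat, wchan, comm) for tasks whose state starts with 'D'.

    ps_output is the text printed by: ps -eo pid,stat,wchan:40,comm
    """
    tasks = []
    for line in ps_output.splitlines()[1:]:  # skip the header row
        fields = line.split(None, 3)
        if len(fields) == 4 and fields[1].startswith("D"):
            pid, stat, wchan, comm = fields
            tasks.append((int(pid), stat, wchan, comm.strip()))
    return tasks


# Hypothetical sample input, shaped like the ps output described above.
SAMPLE = """\
  PID STAT WCHAN                                    COMMAND
    1 Ss   -                                        init
  812 D    nfs_wait_bit_killable                    cp
  901 R+   -                                        DiskTool
"""
```

Here `uninterruptible_tasks(SAMPLE)` keeps only PID 812, whereas a bare grep for D would also have matched the DiskTool line.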

(commit, )

2. Subsystems

2.1. Various

Block/VFS. IO context sharing.

Changing Service Console IP Address in ESX 3.5

Actually this is not that difficult, but remember you will require console access to the server. Be sure to put the machine in Maintenance Mode, then disconnect it from Virtual Center.

It is 9am Pacific Time on Tuesday, July 12th 2011 and I sure hope you’re tuned into the VMware Mega Launch so greatly titled “Raising the Bar, Part V”. If you’re not watching the live broadcast, stop right here and tune into it, then come back and read this post. Spoiler alert: reading beyond this point talks about amazing updates and new features from VMware!

This by far has to be the most exciting launch in the history of VMware. Not only are we getting an update to the vSphere product suite that has hundreds if not thousands of enhancements and new features, we’re also getting updates to other great products like vCloud Director, vShield and SRM. In fact, there are so many changes and so many great new things to talk about that I can’t do it all in one post. So I’ve decided to break these up into multiple posts, each with deep detail. I’ll release these posts as quickly as I can write them, but until I have them completed I want to provide you with some of the great core details from this mega launch. So first off, get ready for another new term from VMware: Cloud Infrastructure and Management. To sum it up, CIM basically includes vSphere (ESXi), vCenter, vShield and vCloud Director as a single package/methodology.

These are all of the building blocks necessary to build a robust, elastic and efficient hybrid cloud. I have a feeling we’re going to hear a lot about how vSphere 5, along with the other above-mentioned products, are the industry-best pieces for running a Cloud Infrastructure. On a side tangent, there is so much discussion on the cloud you wouldn’t believe it. On an almost daily basis I’m meeting with customers to discuss their “Cloud Strategy”. Customers want Hybrid Cloud computing, and with these latest updates that I’m going to discuss I think we’re finally at a place where we truly can have application and data mobility: moving our workloads fluidly across our own data centers in an automated, load-balanced fashion, from compute to now storage, as well as pushing them out to external hosting (cloud) providers for extreme elasticity and fault-tolerant (BC/DR) infrastructure. Ok, so let’s get started on all of these updates!

vSphere 5 (including ESXi 5.0)

First off, everyone should already know, but if you do not: there is no longer a Classic ESX with the traditional Service Console.

VMware stated that version 4.1 would be their last release of the Classic ESX install, and now with version 5.0 there is only ESXi.

Performance – There have been a number of enhancements to the core VMware enterprise hypervisor. In this latest release we’ll see huge performance improvements to the vmkernel as well as in virtual machine density.

ESXi hosts can support up to 512 virtual machines on 160 logical CPUs with up to 2TB of RAM, while virtual machines can now scale to 32 vCPUs with 1000GB of memory and have been tested to push 1,000,000 IOPS. What this basically means is that there shouldn’t be any performance-related reason why you cannot virtualize any workload. The most demanding workloads are being virtualized, such as Oracle RAC, Microsoft SQL, SAP and Exchange 2010.

Image Builder – This is a new utility built upon PowerCLI that allows you to create custom ESXi builds. It allows you to inject ESXi VIBs, driver VIBs and OEM VIBs to create an installable or PXE-bootable (I’ll explain why shortly) ESXi image.

If you’re unaware of what a VIB is, it stands for vSphere Installation Bundle, and you can think of it as a bundle of software.

Auto Deploy – Think UCS Service Profile but at the O/S level. There isn’t any hardware abstraction for moving an existing ESXi image between different hardware, but with Auto Deploy you can quickly and easily create stateless ESXi servers with no disk dependency. To sum it up, you PXE boot your server, the ESXi image is loaded into host memory from the Auto Deploy server, its configuration is applied using an answer file as well as a host profile, and that host is then connected/placed into vCenter. Hose something? A simple reboot will give you a fresh ESXi image in a matter of minutes.

Need to expand your cluster? Bring up another host and add it to the cluster within minutes.

vCenter Virtual Appliance (VCVA) – Whoo Hoo! Looks like vCenter Server on Linux finally hit GA! VMware has released with vSphere 5 a virtual appliance of vCenter Server that is based on Linux! This also includes a feature-rich, browser-based vSphere Client completely built on Adobe Flex. It is not a replacement for the traditional installed vSphere Client, but it is a nice move forward in vSphere management. Ahhh, do you remember the MUI?

🙂

High Availability (HA) Completely Rewritten – Way too much to discuss here, but a complete rewrite of the core HA functionality has happened. HA can now leverage multiple communication paths between agents (referred to as FDM, or Fault Domain Manager), including network and storage (datastore). HA agents no longer use a Primary/Secondary methodology; during cluster creation a single Master is chosen and each remaining host is a Slave.

VMFS5 – Oh my! 64TB datastores, anyone, with a single easy-to-use 1MB block size?

Along with that comes VAAI 2.0, which includes two new block primitives, Thin Provision Stun (finally!) and Space Reclaim. NFS also doesn’t need to feel left out, because we now have Full Clone, Extended Stats and Space Reservation for NFS datastores. We also have a new API called VASA, vStorage APIs for Storage Awareness, which will provide a number of enhancements such as profile-driven storage (think EMC FAST-VP being integrated with vSphere). Quickly back to VAAI 2.0: Thin Provision Stun will protect your virtual machines if your datastore runs out of space, and Space Reclaim will use SCSI UNMAP instead of WRITE ZERO to remove space, which allows the array to release those blocks back to the free pool.

Storage DRS (SDRS) – What DRS does by load balancing virtual machines across hosts, SDRS does for storage by performing Storage vMotion on VMDKs for better performance, capacity utilization, etc. This also includes initial placement as well as affinity-based rules for VMDKs. SDRS can monitor capacity utilization as well as I/O metrics (latency) and dynamically balance your VMDKs across multiple datastores.

Storage vMotion – Snapshot support! As well as being able to move around Linked Clones. There have also been some core enhancements to make things faster and more consistent.

vSphere Storage Appliance (VSA) – It is what it sounds like: a virtual storage appliance that allows SMB customers to use local disk on the ESXi host, presented out as an NFS datastore to the vSphere Cluster. There is replication technology behind it, so if you do lose an ESXi host you will not lose data, nor will you lose connectivity to your virtual machines.

This is meant for up to 3 ESXi hosts and is really tailored for the SMB or ROBO market. There is so much more in vSphere 5, but like I said, I wanted to just give a brief overview at this time.

Site Recovery Manager 5

Host Based Replication – New feature within SRM5: SAN storage/replication is no longer required for SRM. You can now replicate your data at the host level for disaster recovery scenarios in your virtual environment.

Key takeaways: replication works between heterogeneous datastores, and it is managed as a property of the virtual machine. Powered-off VMs are not replicated, and non-critical data (logs, etc.) is not replicated. Physical RDMs are not supported. Snapshots work: the snapshot is replicated, but the VM is recovered with collapsed snapshots. Fault Tolerance, Linked Clones and VM Templates are not supported.

Automated Failback – Replication is automatically reversed, and with a single click you can fail back your virtual machines from your disaster site to your production site. This is huge! You have no idea how much of a pain it is to fail back a site with SRM, unless you’re using the 🙂

Misc – Completely new interface, still within the vSphere Client as a plug-in, but now you can manage it all from a single UI, with no need to use two clients or a linked mode vCenter.

vCloud Director 1.5

Tons of new APIs within vCloud Director 1.5, including vCloud Orchestration via a vCenter Orchestrator module.

Support for Linked Clones is a huge leap forward: you can now deploy vApps in a matter of seconds with minimal storage consumption. Microsoft SQL is now supported as a back-end database, which will make standing up a vCD instance in your lab a lot easier because you won’t need to worry about an Oracle database :). There is also support for federated multi-vClouds by linking vCD instances, as well as enhanced vShield integration, specifically around IPSec VPN. Are you still awake? 1170+ words into this post and I’m still not done, and this is just the brief overview!

VMware, you really outdid yourself!

vShield 5

vShield Edge – Provides us with true multi-tenant site separation complete with VPN capabilities, DHCP, Stateful Firewall and now Static Routing within vShield Edge 5.0.

vShield App – Gives us layer 2/3 protection with VM-level enforcement, now with group-based policies in vShield App 5.0, as well as enabling multiple trust zones on the same vSphere cluster. Layer 2 protection coupled with APIs enables automatic quarantining of compromised VMs.

vShield Data Security – A new member of the vShield family that allows you to monitor virtual machines continuously, and completely transparently to the VM, for compliance with standards such as PCI, PHI, PII and HIPAA, to name a few.


vShield Manager – Enterprise roles found in Manager 5.0 now provide the separation of duties required by some security and compliance standards.

So there you have it. A brief 1706-word blog post covering just the high-level details of the VMware mega launch. Like I said earlier, I’m going to try to focus on some deep-dive details for some of the major topics above. But until then, read up as much as you can on the VMware website, and hopefully relatively soon the bits will be available for public consumption so you can get all of this great fresh new code in your lab!

This post was written on July 12, 2011.

Just received notice this morning that EMC VNX Replicator has been approved for support for VMware Site Recovery Manager 4.0.x and 4.1.x. An excerpt of the message is below:

VMware ESX 4.1 Patch ESX401-SG: Updates vmkernel64, VMX, CIM

Details

Release date: November 15, 2010.